Notice

Recent Posts

Recent Comments

Link

| 일 | 월 | 화 | 수 | 목 | 금 | 토 |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | ||

| 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| 13 | 14 | 15 | 16 | 17 | 18 | 19 |

| 20 | 21 | 22 | 23 | 24 | 25 | 26 |

| 27 | 28 | 29 | 30 |

Tags

- 시각화

- 파이썬

- Visualization

- matplotlib

- 한빛미디어서평단

- MySQL

- 딥러닝

- Blog

- 리눅스

- 한빛미디어

- 텐서플로

- 티스토리

- Python

- Google Analytics

- Tistory

- 독후감

- Linux

- python visualization

- 매틀랩

- MATLAB

- tensorflow

- Pandas

- 블로그

- 파이썬 시각화

- 서평단

- 월간결산

- 서평

- 통계학

- Ga

- SQL

Archives

- Today

- Total

pbj0812의 코딩 일기

[TensorFlow] Natural Language Processing in TensorFlow 본문

인공지능 & 머신러닝/TensorFlow

[TensorFlow] Natural Language Processing in TensorFlow

pbj0812 2020. 4. 1. 02:460. 목표

- tensorflow 자격증 취득을 위한 준비

- 수료증

1. Week1

- Sentiment in text

- Source of found common stopwords

- Sarcasm in News Headlines Dataset by Reshabh Misra

import csv

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

stopwords = [ "a", "about", "above", "after", "again", "against", "all", "am", "an", "and", "any", "are", "as", "at", "be", "because", "been", "before", "being", "below", "between", "both", "but", "by", "could", "did", "do", "does", "doing", "down", "during", "each", "few", "for", "from", "further", "had", "has", "have", "having", "he", "he'd", "he'll", "he's", "her", "here", "here's", "hers", "herself", "him", "himself", "his", "how", "how's", "i", "i'd", "i'll", "i'm", "i've", "if", "in", "into", "is", "it", "it's", "its", "itself", "let's", "me", "more", "most", "my", "myself", "nor", "of", "on", "once", "only", "or", "other", "ought", "our", "ours", "ourselves", "out", "over", "own", "same", "she", "she'd", "she'll", "she's", "should", "so", "some", "such", "than", "that", "that's", "the", "their", "theirs", "them", "themselves", "then", "there", "there's", "these", "they", "they'd", "they'll", "they're", "they've", "this", "those", "through", "to", "too", "under", "until", "up", "very", "was", "we", "we'd", "we'll", "we're", "we've", "were", "what", "what's", "when", "when's", "where", "where's", "which", "while", "who", "who's", "whom", "why", "why's", "with", "would", "you", "you'd", "you'll", "you're", "you've", "your", "yours", "yourself", "yourselves" ]

sentences = []

labels = []

with open("../dataset/bbc-text.csv", 'r') as csvfile:

reader = csv.reader(csvfile, delimiter = ',')

next(reader)

for row in reader:

labels.append(row[0])

sentence = row[1]

for word in stopwords:

token = " " + word + " "

sentence = sentence.replace(token, " ")

sentence = sentence.replace(" ", " ")

sentences.append(sentence)

print(len(sentences))

print(sentences[0])

tokenizer = Tokenizer(oov_token = "<OOV>")

tokenizer.fit_on_texts(sentences)

word_index = tokenizer.word_index

print(len(word_index))

sequences = tokenizer.texts_to_sequences(sentences)

padded = pad_sequences(sequences, padding = 'post')

print(padded[0])

print(padded.shape)

label_tokenizer = Tokenizer()

label_tokenizer.fit_on_texts(labels)

label_word_index = label_tokenizer.word_index

label_seq = label_tokenizer.texts_to_sequences(labels)

print(label_seq)

print(label_word_index)2. Week2

- 예제코드

- tensorflow_datasets 설치

conda install tensorflow-datasetsimport tensorflow as tf

tf.enable_eager_execution() #이걸 써야 나중에 str(s.numpy()) 실행가능

import tensorflow_datasets as tfds

imdb, info = tfds.load("imdb_reviews", with_info = True, as_supervised = True)

import numpy as np

train_data, test_data = imdb['train'], imdb['test']

training_sentences = []

training_labels = []

testing_sentences = []

testing_labels = []

for s,l in train_data:

training_sentences.append(str(s.numpy()))

training_labels.append(l.numpy())

for s,l in test_data:

testing_sentences.append(str(s.numpy()))

testing_labels.append(l.numpy())

training_labels_final = np.array(training_labels)

testing_labels_final = np.array(testing_labels)

vocab_size = 10000

embedding_dim = 16

max_length = 120

trunc_type = 'post'

oov_tok = "<OOV>"

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

tokenizer = Tokenizer(num_words = vocab_size, oov_token = oov_tok)

tokenizer.fit_on_texts(training_sentences)

word_index = tokenizer.word_index

sequences = tokenizer.texts_to_sequences(training_sentences)

padded = pad_sequences(sequences, maxlen=max_length, truncating = trunc_type)

testing_sequences = tokenizer.texts_to_sequences(testing_sentences)

testing_padded = pad_sequences(testing_sequences, maxlen = max_length)

reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

def decode_review(text):

return ' '.join([reverse_word_index.get(i, '?') for i in text])

print(decode_review(padded[1]))

print(training_sentences[1])

model = tf.keras.Sequential([

tf.keras.layers.Embedding(vocab_size, embedding_dim, input_length = max_length),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(6, activation = 'relu'),

tf.keras.layers.Dense(1, activation = 'sigmoid')

])

model.compile(loss = 'binary_crossentropy', optimizer = 'adam', metrics = ['accuracy'])

model.summary()

num_epochs = 10

model.fit(padded, training_labels_final, epochs = num_epochs, validation_data = (testing_padded, testing_labels_final))

e = model.layers[0]

weights = e.get_weights()[0]

print(weights.shape)

import io

out_v = io.open('vecs.tsv', 'w', encoding = 'utf-8')

out_m = io.open('meta.tsv', 'w', encoding = 'utf-8')

for word_num in range(1, vocab_size):

word = reverse_word_index[word_num]

embeddings = weights[word_num]

out_m.write(word + "\n")

out_v.write('\t'.join([str(x) for x in embeddings]) + "\n")

out_v.close()

out_m.close()

sentence = "I really think this is amazing. honest."

sequence = tokenizer.texts_to_sequences(sentence)

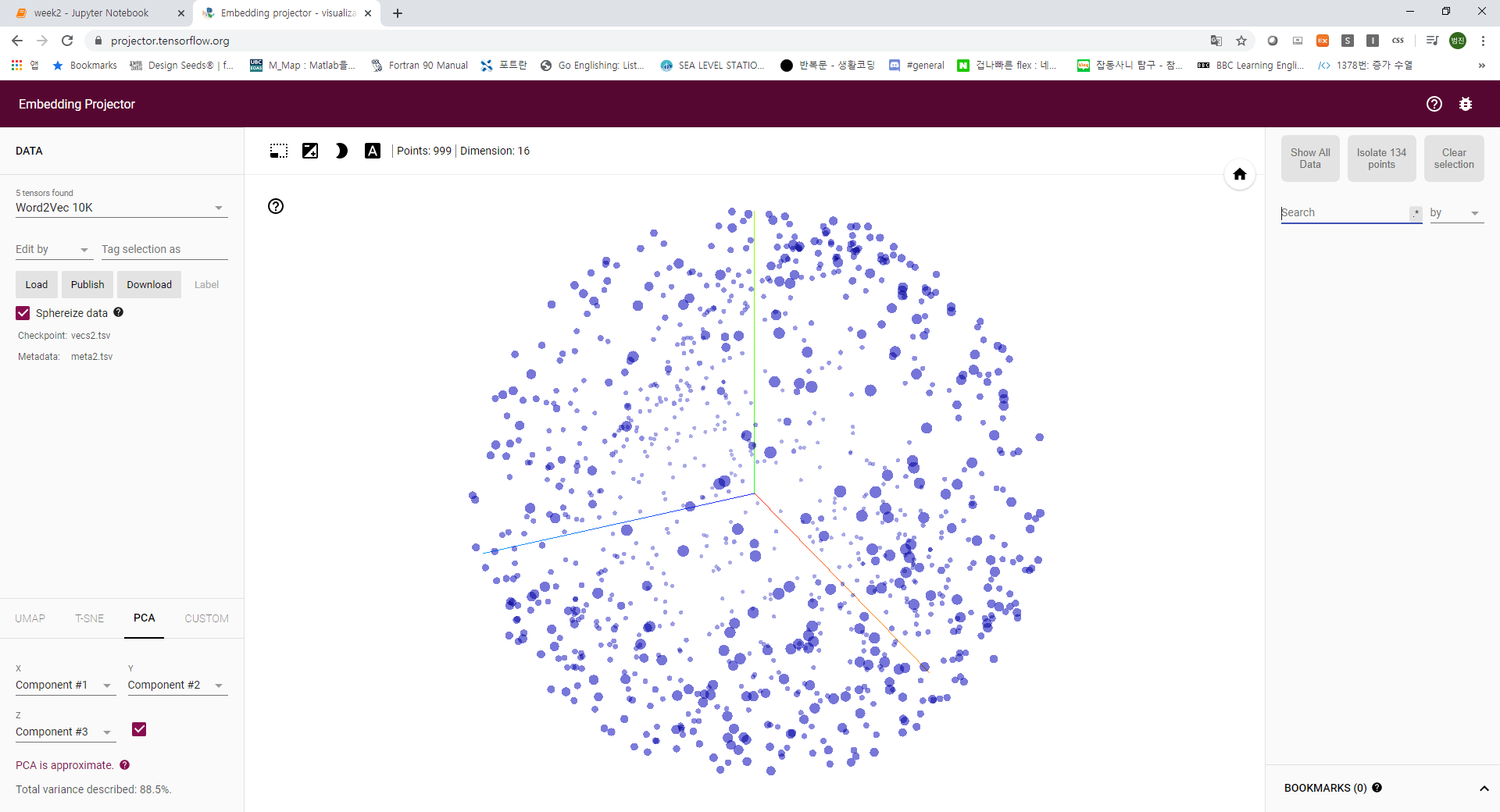

print(sequence)- 결과 파일을 projector.tensorflow.org의 TensorFlow Embedding Projector 의 load data로 시각화 가능

- NLP에서 hyper parameter 조정은 필수적(값에 따라 그 결과가 많은 차이를 보임)

import csv

import tensorflow as tf

import numpy as np

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

vocab_size = 1000

embedding_dim = 16

max_length = 120

trunc_type = 'post'

padding_type = 'post'

oov_tok = "<OOV>"

training_portion = .8

sentences = []

labels = []

stopwords = [ "a", "about", "above", "after", "again", "against", "all", "am", "an", "and", "any", "are", "as", "at", "be", "because", "been", "before", "being", "below", "between", "both", "but", "by", "could", "did", "do", "does", "doing", "down", "during", "each", "few", "for", "from", "further", "had", "has", "have", "having", "he", "he'd", "he'll", "he's", "her", "here", "here's", "hers", "herself", "him", "himself", "his", "how", "how's", "i", "i'd", "i'll", "i'm", "i've", "if", "in", "into", "is", "it", "it's", "its", "itself", "let's", "me", "more", "most", "my", "myself", "nor", "of", "on", "once", "only", "or", "other", "ought", "our", "ours", "ourselves", "out", "over", "own", "same", "she", "she'd", "she'll", "she's", "should", "so", "some", "such", "than", "that", "that's", "the", "their", "theirs", "them", "themselves", "then", "there", "there's", "these", "they", "they'd", "they'll", "they're", "they've", "this", "those", "through", "to", "too", "under", "until", "up", "very", "was", "we", "we'd", "we'll", "we're", "we've", "were", "what", "what's", "when", "when's", "where", "where's", "which", "while", "who", "who's", "whom", "why", "why's", "with", "would", "you", "you'd", "you'll", "you're", "you've", "your", "yours", "yourself", "yourselves" ]

print(len(stopwords))

with open("../dataset/bbc-text.csv", 'r') as csvfile:

reader = csv.reader(csvfile, delimiter = ',')

next(reader)

for row in reader:

labels.append(row[0])

sentence = row[1]

for word in stopwords:

token = " " + word + " "

sentence = sentence.replace(token, " ")

sentences.append(sentence)

train_size = int(len(sentences) * training_portion)

train_sentences = sentences[:train_size]

train_labels = labels[:train_size]

validation_sentences = sentences[train_size:]

validation_labels = labels[train_size:]

tokenizer = Tokenizer(num_words = vocab_size, oov_token=oov_tok)

tokenizer.fit_on_texts(train_sentences)

word_index = tokenizer.word_index

train_sequences = tokenizer.texts_to_sequences(train_sentences)

train_padded = pad_sequences(train_sequences, padding=padding_type, maxlen=max_length)

validation_sequences = tokenizer.texts_to_sequences(validation_sentences)

validation_padded = pad_sequences(validation_sequences, padding=padding_type, maxlen=max_length)

label_tokenizer = Tokenizer()

label_tokenizer.fit_on_texts(labels)

training_label_seq = np.array(label_tokenizer.texts_to_sequences(train_labels))

validation_label_seq = np.array(label_tokenizer.texts_to_sequences(validation_labels))

model = tf.keras.Sequential([

tf.keras.layers.Embedding(vocab_size, embedding_dim, input_length=max_length),

tf.keras.layers.GlobalAveragePooling1D(),

tf.keras.layers.Dense(24, activation = 'relu'),

tf.keras.layers.Dense(6, activation = 'softmax')

])

model.compile(loss = 'sparse_categorical_crossentropy', optimizer = 'adam',metrics = ['accuracy'])

model.summary()

num_epochs = 30

history = model.fit(train_padded, training_label_seq, epochs=num_epochs, validation_data=(validation_padded, validation_label_seq), verbose = 2)

import matplotlib.pyplot as plt

def plot_graphs(history, string):

plt.plot(history.history[string])

plt.plot(history.history['val_' + string])

plt.xlabel("Epochs")

plt.ylabel(string)

plt.legend([string, 'val_' + string])

plt.show()

plot_graphs(history, "acc")

plot_graphs(history, "loss")

reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

def decode_sentence(text):

return ' '.join([reverse_word_index.get(i, '?') for i in text])

e = model.layers[0]

weights = e.get_weights()[0]

print(weights.shape)

import io

out_v = io.open('vecs2.tsv', 'w', encoding='utf-8')

out_m = io.open('meta2.tsv', 'w', encoding='utf-8')

for word_num in range(1, vocab_size):

word = reverse_word_index[word_num]

embeddings = weights[word_num]

out_m.write(word + "\n")

out_v.write('\t'.join([str(x) for x in embeddings]) + "\n")

out_v.close()

out_m.close()

3. Week3

- LSTM

- Sequence Models 코세라 강의(Andrew Ng)

- IMDB Subwords 8K with Single Layer LSTM

- IMDB Subwords 8K with Multi Layer LSTM

- IMDB Subwords 8K with 1D Convolutional Layer

- Sarcasm with Bidirectional LSTM

- Sarcasm with 1D Convolutional Layer

- IMDB Reviews with GRU (and optional LSTM and Conv1D)

- 따라하기(tensorfow_datasets와 tensorflow의 버전 관리 필요)

from __future__ import absolute_import, division, print_function, unicode_literals

import tensorflow_datasets as tfds

import tensorflow as tf

print(tf.__version__)

# Get the data

dataset, info = tfds.load('imdb_reviews/subwords8k', with_info=True, as_supervised=True)

train_dataset, test_dataset = dataset['train'], dataset['test']

tokenizer = info.features['text'].encoder

BUFFER_SIZE = 10000

BATCH_SIZE = 64

train_dataset = train_dataset.shuffle(BUFFER_SIZE)

train_dataset = train_dataset.padded_batch(BATCH_SIZE, train_dataset.output_shapes)

test_dataset = test_dataset.padded_batch(BATCH_SIZE, test_dataset.output_shapes)

model = tf.keras.Sequential([

tf.keras.layers.Embedding(tokenizer.vocab_size, 64),

tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(64, return_sequences=True)),

tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32)),

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(1, activation='sigmoid')

])

model.summary()

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

NUM_EPOCHS = 10

history = model.fit(train_dataset, epochs=NUM_EPOCHS, validation_data=test_dataset)

import matplotlib.pyplot as plt

def plot_graphs(history, string):

plt.plot(history.history[string])

plt.plot(history.history['val_'+string])

plt.xlabel("Epochs")

plt.ylabel(string)

plt.legend([string, 'val_'+string])

plt.show()

plot_graphs(history, 'accuracy')

plot_graphs(history, 'loss')- Glove

- 문제(코드 복붙)

import json

import tensorflow as tf

import csv

import random

import numpy as np

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.utils import to_categorical

from tensorflow.keras import regularizers

embedding_dim = 100

max_length = 16

trunc_type='post'

padding_type='post'

oov_tok = "<OOV>"

training_size=160000

test_portion=.1

corpus = []

# Note that I cleaned the Stanford dataset to remove LATIN1 encoding to make it easier for Python CSV reader

# You can do that yourself with:

# iconv -f LATIN1 -t UTF8 training.1600000.processed.noemoticon.csv -o training_cleaned.csv

# I then hosted it on my site to make it easier to use in this notebook

!wget --no-check-certificate \

https://storage.googleapis.com/laurencemoroney-blog.appspot.com/training_cleaned.csv \

-O /tmp/training_cleaned.csv

num_sentences = 0

with open("/tmp/training_cleaned.csv") as csvfile:

reader = csv.reader(csvfile, delimiter=',')

for row in reader:

list_item=[]

list_item.append(row[5])

this_label=row[0]

if this_label=='0':

list_item.append(0)

else:

list_item.append(1)

num_sentences = num_sentences + 1

corpus.append(list_item)

print(num_sentences)

print(len(corpus))

print(corpus[1])

# Expected Output:

# 1600000

# 1600000

# ["is upset that he can't update his Facebook by texting it... and might cry as a result School today also. Blah!", 0]

sentences=[]

labels=[]

random.shuffle(corpus)

for x in range(training_size):

sentences.append(corpus[x][0])

labels.append(corpus[x][1])

tokenizer = Tokenizer()

tokenizer.fit_on_texts(sentences)

word_index = tokenizer.word_index

vocab_size=len(word_index)

sequences = tokenizer.texts_to_sequences(sentences)

padded = pad_sequences(sequences, maxlen=max_length, padding=padding_type, truncating=trunc_type)

split = int(test_portion * training_size)

test_sequences = padded[0:split]

training_sequences = padded[split:training_size]

test_labels = labels[0:split]

training_labels = labels[split:training_size]

print(vocab_size)

print(word_index['i'])

# Expected Output

# 138858

# 1

# Note this is the 100 dimension version of GloVe from Stanford

# I unzipped and hosted it on my site to make this notebook easier

!wget --no-check-certificate \

https://storage.googleapis.com/laurencemoroney-blog.appspot.com/glove.6B.100d.txt \

-O /tmp/glove.6B.100d.txt

embeddings_index = {};

with open('/tmp/glove.6B.100d.txt') as f:

for line in f:

values = line.split();

word = values[0];

coefs = np.asarray(values[1:], dtype='float32');

embeddings_index[word] = coefs;

embeddings_matrix = np.zeros((vocab_size+1, embedding_dim));

for word, i in word_index.items():

embedding_vector = embeddings_index.get(word);

if embedding_vector is not None:

embeddings_matrix[i] = embedding_vector;

print(len(embeddings_matrix))

# Expected Output

# 138859

model = tf.keras.Sequential([

tf.keras.layers.Embedding(vocab_size+1, embedding_dim, input_length=max_length, weights=[embeddings_matrix], trainable=False),

tf.keras.layers.Dropout(0.2),

tf.keras.layers.Conv1D(64, 5, activation='relu'),

tf.keras.layers.MaxPooling1D(pool_size=4),

tf.keras.layers.LSTM(64),

tf.keras.layers.Dense(1, activation='sigmoid')

])

model.compile(loss='binary_crossentropy',optimizer='adam',metrics=['accuracy'])

model.summary()

num_epochs = 50

history = model.fit(training_sequences, training_labels, epochs=num_epochs, validation_data=(test_sequences, test_labels), verbose=2)

print("Training Complete")

import matplotlib.image as mpimg

import matplotlib.pyplot as plt

#-----------------------------------------------------------

# Retrieve a list of list results on training and test data

# sets for each training epoch

#-----------------------------------------------------------

acc=history.history['accuracy']

val_acc=history.history['val_accuracy']

loss=history.history['loss']

val_loss=history.history['val_loss']

epochs=range(len(acc)) # Get number of epochs

#------------------------------------------------

# Plot training and validation accuracy per epoch

#------------------------------------------------

plt.plot(epochs, acc, 'r')

plt.plot(epochs, val_acc, 'b')

plt.title('Training and validation accuracy')

plt.xlabel("Epochs")

plt.ylabel("Accuracy")

plt.legend(["Accuracy", "Validation Accuracy"])

plt.figure()

#------------------------------------------------

# Plot training and validation loss per epoch

#------------------------------------------------

plt.plot(epochs, loss, 'r')

plt.plot(epochs, val_loss, 'b')

plt.title('Training and validation loss')

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.legend(["Loss", "Validation Loss"])

plt.figure()

# Expected Output

# A chart where the validation loss does not increase sharply!4. Week4

- 단어예측

- Laurences generated poetry 다운로드

- 문제(colab)

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.layers import Embedding, LSTM, Dense, Dropout, Bidirectional

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import Adam

from tensorflow.keras import regularizers

import tensorflow.keras.utils as ku

import numpy as np

data = open('../dataset/sonnets.txt').read()

corpus = data.lower().split("\n")

tokenizer = Tokenizer()

tokenizer.fit_on_texts(corpus)

total_words = len(tokenizer.word_index) + 1

input_sequences = []

for line in corpus:

token_list = tokenizer.texts_to_sequences([line])[0]

for i in range(1, len(token_list)):

n_gram_sequence = token_list[:i+1]

input_sequences.append(n_gram_sequence)

max_sequence_len = max([len(x) for x in input_sequences])

input_sequences = np.array(pad_sequences(input_sequences, maxlen=max_sequence_len, padding='pre'))

predictors, label = input_sequences[:,:-1], input_sequences[:,-1]

label = ku.to_categorical(label, num_classes=total_words)

model = Sequential()

model.add(Embedding(total_words, 100, input_length = max_sequence_len-1))

model.add(Bidirectional(LSTM(150, return_sequences = True)))

model.add(Dropout(0.2))

model.add(LSTM(100))

model.add(Dense(total_words/2, activation='relu', kernel_regularizer=regularizers.l2(0.01)))

model.add(Dense(total_words, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

model.summary()

history = model.fit(predictors, label, epochs=10, verbose=1)

import matplotlib.pyplot as plt

import matplotlib.pyplot as plt

acc = history.history['acc'] # tf2에서는 accuracy, 현재는 버전이 꼬임

loss = history.history['loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'b', label='Training accuracy')

plt.title('Training accuracy')

plt.figure()

plt.plot(epochs, loss, 'b', label='Training Loss')

plt.title('Training loss')

plt.legend()

plt.show()

seed_text = "Help me Obi Wan Kenobi, you're my only hope"

next_words = 100

for _ in range(next_words):

token_list = tokenizer.texts_to_sequences([seed_text])[0]

token_list = pad_sequences([token_list], maxlen=max_sequence_len-1, padding='pre')

predicted = model.predict_classes(token_list, verbose=0)

output_word = ""

for word, index in tokenizer.word_index.items():

if index == predicted:

output_word = word

break

seed_text += " " + output_word

print(seed_text)5. 참고

'인공지능 & 머신러닝 > TensorFlow' 카테고리의 다른 글

| [TensorFlow] Colab 세팅하기 (0) | 2020.07.04 |

|---|---|

| [TensorFlow] Sequences, Time Series and Prediction (coursera) (0) | 2020.04.03 |

| [TensorFlow] Convolutional Neural Networks in TensorFlow (Coursera) (2) | 2020.03.31 |

| [TensorFlow] Introduction to TensorFlow...(Coursera) (0) | 2020.03.29 |

| [TensorFlow] 2.0 설치시 발생한 distutils 에러 해결 (0) | 2019.09.13 |

Comments